Artificial Intelligence Bolsters Physical Security

Machine learning enhances cameras and access control, but robots will not take over anytime soon

In the wake of the May 2018 mass shooting that resulted in 10 deaths at Santa Fe (Texas) High School, the Santa Fe Independent School District looked at all possible options to improve school safety within reasonable financial constraints.

The district considered adding facial recognition to its approximately 750 cameras but did not immediately see a workable solution, both because of cost and because of concerns that shaky accuracy could lead to false positives, says Kip Robins, director of technology for Santa Fe ISD, which has about 4,500 students.

The district ultimately contracted with a company called AnyVision, which demonstrated its Better Tomorrow product, an artificial-intelligence-based application that plugs into an existing camera network and lets users search for a specific face, body or object. School districts or other end users can create a watch list to keep an eye out for potential pedophiles, for example, or someone known to be mentally unstable.
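
How Better Tomorrow matches faces internally is not described here. A common approach in face recognition generally is to convert each detected face into a numeric vector (an embedding) and compare it against stored vectors for everyone on the watch list. The minimal Python sketch below assumes those embeddings already come from some face model, which is not shown; `WatchListEntry`, `match_watch_list` and the 0.8 similarity threshold are hypothetical and are not AnyVision's API.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class WatchListEntry:
    # Hypothetical record: a label plus a face embedding produced by some face model.
    name: str
    embedding: List[float]


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two vectors; 1.0 means the same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # treat an all-zero vector as matching nothing
    return dot / (norm_a * norm_b)


def match_watch_list(
    face: List[float],
    watch_list: List[WatchListEntry],
    threshold: float = 0.8,  # hypothetical cut-off; would be tuned per deployment
) -> Optional[Tuple[str, float]]:
    """Return (name, score) for the best match at or above threshold, else None."""
    best: Optional[Tuple[str, float]] = None
    for entry in watch_list:
        score = cosine_similarity(face, entry.embedding)
        if score >= threshold and (best is None or score > best[1]):
            best = (entry.name, score)
    return best


if __name__ == "__main__":
    watch_list = [
        WatchListEntry("person_a", [1.0, 0.0, 0.0]),
        WatchListEntry("person_b", [0.0, 1.0, 0.0]),
    ]
    print(match_watch_list([0.9, 0.1, 0.0], watch_list))  # close to person_a
    print(match_watch_list([0.0, 0.0, 1.0], watch_list))  # no match: None
```

Raising the threshold cuts down on false positives, the accuracy concern Santa Fe ISD initially had, at the cost of missing more true matches.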

The Santa Fe ISD’s solution is part of a growing class of software offerings that use artificial intelligence to power through reams of data and flag predetermined visual information: someone’s face, a certain license plate, or simply human movement in a place and at a time where there shouldn’t be any.

Talk about AI sometimes gets carried away, with some observers exaggerating both its current abilities and its likelihood of being misused, industry experts say (see sidebar). But it seems likely that AI will continue to gain converts in the security space as the technology develops.

Facial Recognition

The “wow factor” for Robins and his staff at Santa Fe ISD came when their system began recognizing people from 20-year-old photos, and even when they wore hoodies or glasses, though with somewhat lower accuracy in those cases, he says. The potential hit especially close to home when the system was asked to search for one of Robins’ twin sons and picked the young man out of a crowded hallway before Robins himself did.

“I had to look twice and realize, ‘This is my son,’ ” he says. “I didn’t pick it up, but the software picked it up.”

AnyVision proved affordable for Santa Fe ISD in part because the license agreement enables the district to move the software from camera to camera, adding it at the football stadium on Friday nights, for example, without it needing to be there all week, Robins says. And it adds to the camera network’s ability to keep a close watch on the district’s facilities in a way that human guards alone couldn’t possibly do.

“It’s almost like having a security guard posted on every part of the building,” he says. “It’s impossible for a group of people to watch that many cameras at the same time.”

For reasons related to the Family Educational Rights and Privacy Act (FERPA) and the Health Insurance Portability and Accountability Act (HIPAA), Santa Fe ISD stores data from the system locally rather than in the cloud, Robins says. “We want to make sure our kids are as protected as possible,” he says. “This is just another school record in our mind.”

All in all, Robins believes, the system will make another tragedy less likely. “May 18 was our 9/11,” he says. “After 9/11, Homeland Security was put into place. After May 18, our community expected us to do more. The students, the staff, wanted us to do more. It’s about keeping kids safe. This is just a very simple way for us to do it.”

AI for License Plate Recognition

Artificial intelligence-enabled cameras and access control systems can be set up to recognize license plates as cars enter and leave parking lots and other facilities. Already useful to law enforcement in criminal investigations, AI-enabled license plate recognition can benefit private security as well.

Pierre Schimper, who owns the integrator business Azilsa, Inc., helped a major rental car company in the Midwest install a license plate recognition application called Open ALPR, produced by Rekor Systems. As with Santa Fe ISD and AnyVision, the system has improved accuracy without massively increasing costs. It helps the company track inventory as vehicles leave the main lot or arrive at an overflow lot it keeps to handle influxes of cars.

“Different sites in different states can actually communicate,” Schimper says. “The nice thing is, I can use normal cameras. I don’t have to have specialized cameras. It keeps costs down for me and my client. I’m happy, and they’re happy.”

In addition to making sure employees don’t take a two-hour lunch break when they’re supposed to be moving a car five minutes away, the Open ALPR system helps to detect and investigate theft. A few months ago, for example, Schimper’s client had five Dodge Chargers stolen off a lot, but no one knew which ones.

“I was able to pull up the license plate for all of them,” he says. “That makes it way easier from [a] security perspective to see that it has been returned. The nice thing about the system is, we can put up an alert in place. Let’s say they’re looking for a certain vehicle: as soon as the vehicle enters the gate and gets captured, an alert goes out immediately. … Because of the volume of vehicles they register, and since a lot of license plates follow similar numbers, humans can make mistakes. This system doesn’t make mistakes.”
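
Schimper does not say how the alerts are configured. The gate-alert idea can be sketched as a lookup of each plate read against a normalized watch list. This minimal Python example assumes a plate-recognition engine already supplies the text of each read; `PlateWatchList`, `normalize_plate` and the alert fields are hypothetical and are not part of Open ALPR's API.

```python
import re
from datetime import datetime
from typing import Dict, Iterable, Optional


def normalize_plate(raw: str) -> str:
    """Uppercase a plate read and drop spaces, hyphens and other separators."""
    return re.sub(r"[^A-Z0-9]", "", raw.upper())


class PlateWatchList:
    """Hypothetical watch list of plates that should trigger an alert at a gate."""

    def __init__(self, plates: Iterable[str]) -> None:
        self._plates = {normalize_plate(p) for p in plates}

    def add(self, plate: str) -> None:
        self._plates.add(normalize_plate(plate))

    def check(self, raw_read: str, gate: str, seen_at: datetime) -> Optional[Dict[str, str]]:
        """Return an alert record if this read is on the watch list, else None."""
        plate = normalize_plate(raw_read)
        if plate in self._plates:
            return {"plate": plate, "gate": gate, "seen_at": seen_at.isoformat()}
        return None


if __name__ == "__main__":
    watch = PlateWatchList(["ABC-1234"])
    print(watch.check("abc 1234", "overflow lot gate", datetime(2019, 6, 1, 12, 5)))
    # A plate with two digits swapped is a different plate, so no alert.
    print(watch.check("ABC 1243", "overflow lot gate", datetime(2019, 6, 1, 12, 6)))
```

Exact matching keeps look-alike plates such as ABC1234 and ABC1243 apart, the kind of confusion Schimper describes, but it also means a single misread character would cause a missed alert, so handling imperfect reads is a separate design decision.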

Other Use Cases

Vendors of other AI-enabled physical security products see a range of possible use cases, in sectors including retail, banking, healthcare and public housing.

Retailers can accurately count and analyze foot traffic for purposes ranging from employee management to inventory stocking to physical security, notes Seagate Technology, which produces the SkyHawk line of surveillance hard drives. Retailers can boost their loss prevention efforts with the kind of secure capture, analysis, transfer, retention and preservation of surveillance data that AI has begun to make significantly more powerful, the company says.
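
Seagate does not describe how counting is performed. A widely used technique in video analytics is to follow each person from frame to frame and tally each time their path crosses a virtual line drawn across an entrance. The minimal Python sketch below assumes an upstream detector and tracker (not shown) already supply each person's positions; the function name and the "in"/"out" direction convention are hypothetical.

```python
from typing import Dict, List, Tuple

Point = Tuple[float, float]  # (x, y) centroid of a tracked person in image coordinates


def count_line_crossings(tracks: Dict[int, List[Point]], line_y: float) -> Dict[str, int]:
    """Count how many times tracks cross the horizontal line y = line_y.

    Hypothetical convention: moving toward larger y (down the image) is "in",
    moving toward smaller y is "out".
    """
    counts = {"in": 0, "out": 0}
    for points in tracks.values():
        # Compare each consecutive pair of positions for the same person.
        for (_, y0), (_, y1) in zip(points, points[1:]):
            if y0 < line_y <= y1:
                counts["in"] += 1
            elif y1 < line_y <= y0:
                counts["out"] += 1
    return counts


if __name__ == "__main__":
    tracks = {
        1: [(0, 10), (0, 40), (0, 60)],  # walks down past the line: one "in"
        2: [(5, 80), (5, 30)],           # walks up past the line: one "out"
        3: [(1, 10), (1, 20)],           # never reaches the line
    }
    print(count_line_crossings(tracks, line_y=50))  # {'in': 1, 'out': 1}
```

Because every pair of consecutive positions is checked, someone who steps back and forth across the line is counted on each crossing, which a real deployment might choose to smooth out.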

At banks, facial recognition cameras are being deployed at entrances, teller windows and automatic teller machines (ATMs), enabling fraud investigators to quickly garner important evidence, Seagate notes. The systems also can handle motion detection in restricted areas like vaults, providing an alert to guards when an unauthorized person enters such an area.
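
The vault scenario can be sketched as a zone check: draw the restricted area as a polygon in the camera's view and raise an alert when a detected person inside it is not on an authorized list. This minimal Python example uses the standard ray-casting point-in-polygon test. It assumes detections arrive as person IDs with image coordinates from some upstream identification step (not shown); the function names and IDs are hypothetical, not any vendor's API.

```python
from typing import Dict, List, Set, Tuple

Point = Tuple[float, float]


def point_in_polygon(x: float, y: float, polygon: List[Point]) -> bool:
    """Ray casting: count crossings of a ray from (x, y) toward +x; odd means inside."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the ray's y can be crossed (this also rules out y1 == y2).
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def restricted_area_alerts(
    detections: Dict[str, Point], zone: List[Point], authorized_ids: Set[str]
) -> List[str]:
    """Return IDs of people detected inside the zone who are not authorized."""
    return [
        person_id
        for person_id, (x, y) in detections.items()
        if point_in_polygon(x, y, zone) and person_id not in authorized_ids
    ]


if __name__ == "__main__":
    vault = [(0, 0), (10, 0), (10, 10), (0, 10)]
    seen = {"guard_1": (2, 2), "visitor_7": (5, 5), "visitor_8": (20, 20)}
    print(restricted_area_alerts(seen, vault, authorized_ids={"guard_1"}))  # ['visitor_7']
```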

In the healthcare sector, Charlotte Hungerford Hospital in Torrington, Connecticut, wanted appearance search technology that could locate a specific person or vehicle of interest. The hospital installed more than 100 Avigilon cameras equipped with self-learning analytics, and in areas where cameras can’t be installed, it added a separate Avigilon product that handles motion detection with radar. The system has improved response time and overall situational awareness.

In a similar vein but a different arena, the New Bedford (Mass.) Housing Authority installed 173 new Avigilon cameras across its 19 properties, along with motion detection software that tracks movement and distinguishes normal from abnormal activity. Focused on areas that have experienced petty crime, the system takes the onus off witnesses who might be reluctant to come forward by bearing witness itself. The video evidence it collects has also helped the local police force in its investigations.

AI Myths and Misperceptions

Artificial intelligence has attracted the attention of many within the physical security field for its potential to recognize and send alerts about anything from a particular person being on the premises, to the license plate of a car entering a company’s parking lot. But some have exaggerated ideas about its potential for both good and harm, experts say, which has led to myths and misperceptions, such as:

-

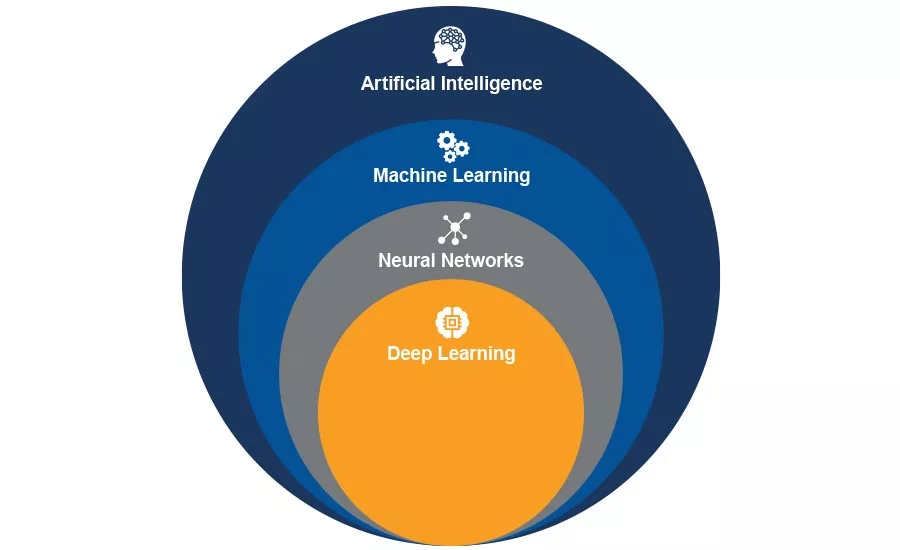

Artificial intelligence is pretty much the same thing as actual intelligence these days. In fact, the current state of AI, better described as machine learning, can be trained for specific purposes and handle algorithms more quickly than a human brain possibly could, but that’s about it, says Matt Hill, chief science officer at Rekor Systems. “It mimics intelligence on very specific applications,” he says. “You can train the software to notice the make and model of a car, and it can be quicker [than people]. But in terms of deductive reasoning, making inferences from that, it’s very limited.” Adds Sean Lawlor, data science team leader at Genetec, “We don’t have anything like [the movie] ‘Minority Report.’ The idea that it’s a solve-all kind of thing, that’s not true at the moment. We’re seeing more and more advances.”

- AI-enabled camera and access systems have become 100% accurate. Accuracy does continue to improve, but it is not perfect, says Lawlor, whose company develops security, public safety, operations and business intelligence solutions, such as applications that handle people counting and license plate recognition. “A lot of manufacturers are hesitant to [offer a] performance guarantee,” he says. “Facial recognition can be helpful to speed an investigation, but the customer has to be aware: what if it didn’t pick up the face? Those cases have to be handled by humans.”

- Machines will know when something is wrong, and say so. “It has to be taught what ‘wrong’ is,” Lawlor says. “The marketing is ahead of the technology at this point, in some cases.” Overall, though, he believes “it’s proved to be pretty promising. We’re now seeing solutions that do object detection, or face detection. We’ll see a new phase coming pretty soon where we’ll be combining those together to build a bigger picture. That’s what we’re focusing on now.”

- Artificial intelligence inevitably will lead to greater profiling based on race and other factors. “Given that accurate AI is based on the data upon which the algorithms are trained, and the way that the underlying algorithms are constructed, there are risks,” says Max Constant, chief commercial officer for AnyVision. “To avoid those risks, and protect against them, it is contingent upon the designers and developers of this technology to ensure that they build and deliver a solution to the market that is unbiased,” he says. “If algorithms are ethnically or gender biased, backlash from the market is understandable, but it is important to note that this is a broken algorithm problem, not an AI problem, that is driving fear and misunderstanding.”

- Artificial intelligence will inevitably lead to a loss of privacy. “This will only happen if the designers and developers of these solutions do not ensure the highest level of compliance with data integrity, privacy tools, ethics and governance standards around artificial intelligence,” Constant says.
