Is H.264 Today’s Next Show Biz Star?

Thanks to H.264, streaming security video can be displayed better. Here, video wall software from IndigoVision supports fully featured, IP-based control room video walls of any size.

It’s not often that a standard receives recognition along the lines of an Emmy award. But H.264 did.

The members of the Academy of Television Arts & Sciences recently honored the performance of the Joint Video Team Standards Committee for the development of the High Profile for H.264/MPEG-4 AVC. It enables high-definition images in H.264 video encoding to deliver HD video over satellite and cable TV as well as Blu-ray Disc.

As with the introduction of the CCD into the security marketplace after its volume-driven success on the consumer and broadcast sides, today’s darling of compression/decompression (codec) has stampeded into security video components and systems. It’s a mostly welcome entry with a few gentle warnings.

The most apparent advantages of H.264 are lower storage costs with no loss of image quality, frame rate or retention time, or, alternatively, higher-quality images and higher frame rates at the same drive size, retention time and bandwidth.

Warnings center on the fact that H.264 adds complexity to the security video system and requires investment in more processing power in cameras, encoders and storage, and again when decompressing images for live or retrieved viewing. Then there are the challenges of noise and motion.

Complexities Will Increase

Since H.264 uses a more complex algorithm, it requires more CPU resources to compress and decompress the video signal. The consequences, according to Mark Wilson of Infinova, are twofold. First, the camera requires more power and generates more heat, which, in a harsh environment, may result in reliability issues. Second, since H.264 uses interframe compression, high-motion scenes can introduce compression artifacts or blur into the video. This affects the forensic value of the video and could cause issues with evidence in court.

Yet you shouldn’t see any complexity, according to Tom Galvin of Samsung GVI Security. Instead, there should be a seamless transition, and adoption is happening quickly.

The “no-free-lunch” bottom line: “In the evolution of technologies, very seldom do you get something for nothing,” surmises IQinVision’s Pete DeAngelis. But, sometimes, the advance is worth it.

For example, H.264 is among the tools in a unique transportation security system under the streets of Seoul, Korea. The design sends live, real-time video from moving subway trains to other moving subway trains. Wireless mesh networking in a linear configuration from Firetide fights off radio interference, dust, vibration and metal echoes underground.

The security video system, operated by the Seoul Metropolitan Rapid Transit Corporation (SMRT), when completed this June, will be the first real-time, fixed mobile wireless video surveillance subway system in Korea and the world, costing an estimated $60 million.

SMRT also wanted a system where the operators of the moving trains would have a video of the station being entered. That way the driver could decide not to enter the station in case of accidents or other problems such as a person on the track. The ability to stream video from a station’s video camera to a monitor in a train moving at speeds of 50 mph was critical. In addition to providing video surveillance from the station to train operators, the network will also provide video surveillance of passenger trains to a monitoring center and video streaming of public and commercial advertisements onto passenger train monitors. The H.264 component of the design brought to life the real-time advantages of video streaming.

It’s Been Around a While

Whether viewing the good or challenging, this codec show biz star is no fresh face. Also known as MPEG-4 Part 10, H.264 is a digital video codec standard finalized in 2003 that promises to compress video data to a very low bit rate while maintaining high-quality video. Right now, many video surveillance systems are forced to sacrifice bandwidth and costly network storage. However, if the promise of H.264 is realized, the same resources being used today will be capable of transmitting and storing more video streams with higher frame rates and resolution.

But does it live up to its initial reviews? Mostly, say those who have tested its capabilities.

Anixter’s Infrastructure Solutions Lab recently conducted several tests to compare the differences in bandwidth consumption between H.264 and MJPEG video streams using a single camera from a manufacturer that supports both compression technologies.

The lab found significant differences in network consumption between the two. According to the company, when the camera viewed little or no motion, the H.264 compressed video stream required roughly 10 percent of an equivalent MJPEG compressed video stream’s network bandwidth. In tests with a high degree of motion, the H.264 stream used more bandwidth, resulting in a smaller, but still substantial, difference in network consumption. The biggest potential difference in network utilization is seen in tests with higher frame rates. At lower frame rates, the differences are not quite as large.
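To see what a difference of that size means for disk space, the arithmetic can be sketched in a few lines of Python. This is only an illustration: the 8 Mbps MJPEG bit rate, the 16 cameras and the 30-day retention period are hypothetical figures, not values from the Anixter tests, and the 10 percent ratio applies only to the low-motion case the lab described.

```python
def storage_gigabytes(bitrate_mbps: float, cameras: int, retention_days: float) -> float:
    """Disk space (decimal GB) for continuous recording at a constant bit rate."""
    seconds = retention_days * 24 * 60 * 60
    total_megabits = bitrate_mbps * seconds * cameras
    return total_megabits / 8 / 1000  # megabits -> megabytes -> gigabytes


# Hypothetical per-camera MJPEG bit rate; not a measured value.
MJPEG_MBPS = 8.0
# Low-motion ratio reported by the lab: H.264 at roughly 10 percent of MJPEG.
H264_LOW_MOTION_RATIO = 0.10

mjpeg_gb = storage_gigabytes(MJPEG_MBPS, cameras=16, retention_days=30)
h264_gb = storage_gigabytes(MJPEG_MBPS * H264_LOW_MOTION_RATIO, cameras=16, retention_days=30)

print(f"MJPEG: {mjpeg_gb:,.0f} GB, H.264 (low motion): {h264_gb:,.0f} GB")
```

Because the ratio shrinks as scene motion increases, a real estimate would need a separate figure for busy scenes.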

The same camera, lens and monitor were used to observe the video produced via the two different compression schemes. In the qualitative assessment by lab engineers, there was a slight difference in the video quality produced using the two different technologies. H.264 video quality was approximately 95 percent as good as the video produced by the MJPEG compression method.

However, the Anixter lab observed that a strobe effect of certain striped or checkered patterns could cause the data rate of the H.264 compressed video stream to increase dramatically over scenes without such patterns. If these patterns consumed a significant portion of the camera’s field of view, they seemed to represent a large area of motion to the camera’s encoding engine and caused the amount of data required to transmit the image to spike. Still, these unusual spikes did not reach the level of resources required to transmit and store an equivalent MJPEG stream.

Sorting out the Hype

Beyond lab tests, there are a lot of claims flying over H.264. Some say that the codec is the most aggressively marketed and least understood of the new technologies in security. But right or wrong, there is a place for it.

While chief security officers and security directors now are much more aware of H.264 technology and other IP video solutions, as well as their tech and business advantages, the reality of restrictions in bandwidth, infrastructure and stranded investments in legacy systems is still a significant barrier to implementation.

According to Jason Oakley of North American Video (NAV), H.264 includes predictive capabilities (using past, current and next frames) and enhanced motion compensation, all of which enable higher frame rates for recording. It is affordable and suitable for video surveillance applications on a network platform, he says, especially in systems that may contain hundreds or thousands of cameras. It has all the advantages of MPEG-4 but further reduces the file size while providing the same quality of video. This in itself can be a significant bonus for transmitting, recording or archiving video.

From Chips to Firmware

Of course, H.264 capability is available with some manufacturers through a simple firmware upgrade to their IP cameras and encoders. You should ask about frame rate and resolution limitations if you are running H.264 encoding and possibly other functions like video analytics on hardware designed for MPEG-4, suggests Bob Banerjee of Bosch Security Systems. On new hardware, ask about the ability to view a low-latency stream and record a more highly compressed (but high-latency) stream. By the way, in a network, latency, a synonym for delay, is an expression of how much time it takes for a packet of data to get from one designated point to another.

There may also be a horse and carriage relationship between megapixel and HD cameras and H.264. Some end-users and integrators believe that the codec is an enabler for the new cameras while others see the megapixel craze as a reason to upgrade to H.264.

And about those noise and motion challenges, there is bad news and good news. No matter the codec, noise is always present; and it is notoriously difficult to compress noise due to its random nature. High levels of noise are typically caused by low-light conditions, requiring larger bandwidth and larger disk storage space to archive.
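The point about noise can be illustrated with a small Python sketch. It uses the standard-library `zlib` compressor as a stand-in, which is a general-purpose lossless compressor and not a video codec, and the 64 by 64 "frames" and noise range are invented for the example. The underlying effect is the same, though: random variation leaves the compressor little redundancy to remove.

```python
import random
import zlib

rng = random.Random(0)  # fixed seed so the run is repeatable
WIDTH, HEIGHT = 64, 64

# A flat gray "frame": every pixel has the same brightness.
clean = bytes([128] * (WIDTH * HEIGHT))

# The same frame with simulated low-light sensor noise added to each pixel.
noisy = bytes(
    min(255, max(0, 128 + rng.randint(-20, 20)))
    for _ in range(WIDTH * HEIGHT)
)

clean_size = len(zlib.compress(clean))
noisy_size = len(zlib.compress(noisy))

print(f"clean frame: {clean_size} bytes, noisy frame: {noisy_size} bytes")
```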

On the other hand, signal differences due to motion are much easier to compress. The majority of the computational effort is typically concentrated in estimating motion. The goal of motion estimation is to locate blocks of pixels in the current video frame that closely match blocks of pixels in the previous frame corresponding to the portions of the scene that may have moved during the interval between frames.
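A minimal sketch of that block-matching idea follows, in Python. It is an illustration under simplifying assumptions, not how any particular H.264 encoder works: it runs an exhaustive search over a small window and scores candidates by the sum of absolute differences (SAD), whereas real encoders use faster search strategies and more elaborate cost measures. The function names, the 12 by 12 frame and the block size are all hypothetical.

```python
def sad(prev, curr, px, py, cx, cy, size):
    """Sum of absolute differences between a block in curr and one in prev."""
    return sum(
        abs(curr[cy + r][cx + c] - prev[py + r][px + c])
        for r in range(size)
        for c in range(size)
    )


def best_motion_vector(prev, curr, cx, cy, size=4, search=3):
    """Exhaustively search prev for the block that best matches curr's block at (cx, cy).

    Returns (cost, dx, dy), where (dx, dy) points from the current block
    to its best match in the previous frame.
    """
    height, width = len(prev), len(prev[0])
    best = None
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            px, py = cx + dx, cy + dy
            # Skip candidate blocks that would fall outside the frame.
            if 0 <= px <= width - size and 0 <= py <= height - size:
                cost = sad(prev, curr, px, py, cx, cy, size)
                if best is None or cost < best[0]:
                    best = (cost, dx, dy)
    return best


def make_frame(x, y, width=12, height=12, size=4):
    """Dark frame with one bright square whose top-left corner is at (x, y)."""
    frame = [[0] * width for _ in range(height)]
    for r in range(size):
        for c in range(size):
            frame[y + r][x + c] = 200
    return frame


previous = make_frame(2, 3)  # object at (2, 3) in the previous frame
current = make_frame(4, 4)   # object has moved to (4, 4)

cost, dx, dy = best_motion_vector(previous, current, cx=4, cy=4)
print(cost, dx, dy)  # a perfect match (cost 0) found 2 pixels left and 1 pixel up
```

When a perfect or near-perfect match exists, the encoder needs to send little more than the motion vector. When nothing matches well, as with rain or a shaking camera, it has to send far more data.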

Estimating Motion is Important

Still, there are dangers embedded in the H.264 approach when it comes to motion.

Factors that will cause bandwidth and storage demands to vary widely include wind blowing the trees. As the tree moves in the video, it will increase the bandwidth and storage required. “Imagine having to estimate how frequently the wind will blow before you can properly estimate the amount of storage required for your video surveillance system,” suggests DeAngelis. Rain and snow are viewed by a temporal encoder as nearly 100 percent scene motion. This greatly increases bandwidth and storage requirements. Wind moving the camera’s mount is the worst kind of scene motion because 100 percent of the scene is changing 100 percent of the time. A camera mounted on a pole that could be subject to such disturbances would not be a good choice for H.264 encoding. Speed of the objects being viewed also will impact bandwidth and storage as well as image quality.

How can end users maneuver through the number of cameras, throughput and frames per second of H.264 vs. other codecs?

This is very, very difficult with H.264 compression. With H.264, anytime something moves in the field of view, the bandwidth and storage requirements will go up; by how much depends on how much motion there is and on the desired image quality. Normal, everyday occurrences, which are almost always in the field of view of a security camera, can cause significant bandwidth and storage problems, says the IQinVision executive.

Is it a myth that there are hidden costs in H.264? Yes, according to Dr. Michael Korkin of Arecont Vision. The theory, he reports, holds that because the computational complexity of the H.264 encoder is very high, the required decoder resources must be high as well, many times higher than required for JPEG. The “hidden cost,” as the theory goes, is in the additional computer server power needed to decompress multiple H.264 video streams in a multi-camera security installation in order to display live video from multiple cameras.

In reality, says Dr. Korkin, the exact opposite is true. H.264 streams encoded by some cameras require less computational power to decompress than JPEG streams, a fact that has been demonstrated on brand-name and open-source H.264 software decoders.

Cost, however, may not be hidden, says Glenn Adair of Panasonic System Solutions, who explains that the H.264 standard is a family of profiles that add a set of extra capabilities to the MPEG-4 standard to improve its ability to compress video. These and other enhancements make for a very efficient compression with improvements over MPEG-4. However, these savings are not guaranteed, he notes. It is important to remember that if a scene is complex with a lot of motion or complex patterns, it is difficult for the encoder to process. There may be little or no improvement over MPEG-4 and even spikes in bandwidth that exceed that of a similar scene in MPEG-4.

Choose Your Level of Enhancement

It is important to remember that not every enhancement is implemented in all instances of H.264, suggests Adair. With several profiles in the standard, most security cameras use the Baseline Profile, which has been designed for low-cost applications such as video conferencing and does not implement many of the H.264 features found in the High Profile, which has become the standard for Blu-ray encoding. In general, the more features included, the more processing power required to effectively implement it. And the higher the price tag.

The most important thing to remember, according to Adair, is that H.264 remains an encoding scheme which does not improve the quality of the video. You still need to address your original security concerns. The example often cited is that H.264 may be a better, more fuel-efficient truck, but it doesn’t change the quality of the product that is being transported. Care must be taken to ensure that the image produced is worth transporting before you address the how and why of transporting it.

H.264, a derivative of MPEG-4, compresses file sizes more efficiently while maintaining resolution and low bandwidth.

The Evolution of Video Compression

The three most commonly used video recording/compression formats are: M-JPEG, MPEG and H.264. All perform the basic function of compressing the video information by eliminating redundant or irrelevant information. Redundancy reduction looks for patterns and repetitions and irrelevance reduction looks to remove or alter information that makes little or no difference to the perception of the image, according to Jason Oakley, CEO at integrator North American Video.

Specifically, M-JPEG (Motion Joint Photographic Expert Group) is a sequence of individual digital still video images that have each been compressed using the JPEG compression format. It is ideal for applications where the recording can be set to a lower frame rate but it does require larger amounts of bandwidth for transmission, adds Oakley.

MPEG (Moving Picture Expert Group) technology in MPEG-4 transmits reference frames (R-frames). The R-frames are data about areas of the scene that have changed since the last I-frame (object-based encoding). MPEG-4 reduces the bandwidth needed by using algorithms to define objects within the field of view and predict motion of those objects between I-frames, according to Wilson of Infinova. This approach offers high video quality compared to the network bandwidth and storage required.

The H.264 compression algorithms are a derivative of MPEG-4 but file sizes are compressed even more efficiently while maintaining resolution and low bandwidth.

Speaking of complexity, there are two important ‘flavors’ of H.264 – Baseline Profile, which compresses quickly and at an acceptable, impressive bit rate, and Main Profile, which takes longer but compresses to an even lower bit rate. Baseline Profile is perfect for live viewing while Main Profile is for recording. In fact, there can be a wide range of profiles.

Wireless adds an interesting twist to a more robust codec, adds Matt Nelson of AvaLAN Wireless Systems. Still, whatever the means of transmission, H.264 requires more processing power at both the encoder and decoder ends.

Two Lanes, Same Street End

In addition to the standards work through the Security Industry Association, the trade group of manufacturers and service providers, there are two organizations bringing their idea of a flattened playing field to IP networking related to security systems.

ONVIF – Founded in 2008 by Bosch Security Systems, Sony and Axis Communications, Open Network Video Interface Forum is the first step toward interfacing network video products. ONVIF aims at global standardization for interfacing network video devices to enable broad interoperability and communication between different products.

PSIA – The Physical Security Interoperability Alliance is a global consortium of nearly 50 physical security manufacturers and systems integrators focused on promoting interoperability of IP-enabled security devices across all segments of the security industry. Participating companies include Adesta, Arecont Vision, Assa Abloy, Cisco Systems, GE Security, Genetec, Honeywell, IBM, IQinVision, Johnson Controls, March Networks, Milestone Systems, NICE Systems, ObjectVideo, OnSSI, Pelco, SCCG, Stanley Security, Texas Instruments, Tyco International, Verint and Vidsys.

Also founded in 2008, PSIA’s objective is to develop standards relevant to networked physical security technology across all segments including video, access control, analytics and software.

By buying into an H.264-based system you may have the opportunity to leverage the ONVIF standard when ONVIF-compliant software, cameras and encoders become commonplace. Make sure that the device has a concrete upgrade path to either ONVIF or PSIA to protect your investment.

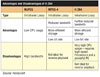

Advantages and Disadvantages of H.264

Hold On: Check Out Intra and Inter, Lossy and Lossless

With any complex technology, there are terms that chief security officers and security directors should know while, at the same time, leaving the nuts and bolts to be handled by a systems integrator.

Image and data compression may be lossless or lossy. Lossless compression results in a bit-for-bit perfect match with the original image and data. Lossy compression results in a decompressed image that is not a 100 percent bit-for-bit match to the original image, but is still useful and yields much greater compression ratios. Lossy compression is the most popular for video images and lossless is typically used for data or text files.
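The difference between the two can be shown with a short Python sketch. It is a toy illustration, not a video codec: the standard-library `zlib` module provides the lossless step, and the "lossy" step is simply rounding each brightness value down to a multiple of 16 before compressing. The sample data and the step size are assumptions chosen for the example.

```python
import random
import zlib

rng = random.Random(1)  # fixed seed so the run is repeatable

# A slow brightness ramp with a little random variation on each sample.
original = bytes(min(255, (i // 16) + rng.randint(0, 7)) for i in range(4096))

# Lossless: decompressing returns exactly the bytes that went in.
lossless = zlib.compress(original)
restored = zlib.decompress(lossless)

# Toy lossy step: discard fine detail by rounding down to a multiple of STEP,
# then compress what remains. The discarded detail cannot be recovered.
STEP = 16
quantized = bytes((value // STEP) * STEP for value in original)
lossy = zlib.compress(quantized)

print(f"original: {len(original)} bytes")
print(f"lossless: {len(lossless)} bytes, exact match: {restored == original}")
print(f"lossy:    {len(lossy)} bytes, exact match: {quantized == original}")
```

The lossy version is much smaller, but no decoder can reconstruct the original values from it, which is the trade-off described above.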

Other types of compression include intraframe and interframe. Intraframe compresses each individual frame. This type of compression is ideal for streaming video, although it can result in sending the same image over and over, especially if little changes in the scene. Interframe compression, on the other hand, uses a reference image and then only sends updates when changes to the reference image occur, until a new reference image is used.
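A toy Python comparison makes the distinction concrete. It is a sketch under simplifying assumptions: the "frames" are short lists of brightness values, and the interframe encoder sends plain pixel differences rather than the motion-compensated predictions real codecs use. All function names are hypothetical.

```python
def encode_intraframe(frames):
    """Send every pixel of every frame, whether or not it changed."""
    return [list(frame) for frame in frames]


def encode_interframe(frames):
    """Send the first frame whole, then only (index, value) pairs that changed."""
    reference = list(frames[0])
    updates = []
    previous = reference
    for frame in frames[1:]:
        changes = [
            (i, new) for i, (old, new) in enumerate(zip(previous, frame)) if old != new
        ]
        updates.append(changes)
        previous = frame
    return reference, updates


def decode_interframe(reference, updates):
    """Rebuild every frame by applying each set of changes to the previous frame."""
    frames = [list(reference)]
    for changes in updates:
        frame = list(frames[-1])
        for index, value in changes:
            frame[index] = value
        frames.append(frame)
    return frames


# Five 100-pixel frames of a nearly static scene: one pixel changes per frame.
frames = [[10] * 100]
for k in range(1, 5):
    next_frame = list(frames[-1])
    next_frame[k] = 99
    frames.append(next_frame)

intra_values = sum(len(frame) for frame in encode_intraframe(frames))
reference, updates = encode_interframe(frames)
inter_values = len(reference) + sum(2 * len(changes) for changes in updates)

print(intra_values, inter_values)  # 500 values sent versus 108
```

If every pixel changed in every frame, as with a camera shaking on its pole, the interframe version would send more values than the intraframe one, since each change carries an index as well as a value.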

There are questions to answer when considering H.264.

Resolution – What is your requirement for image quality?

Frame Rate – What do you need to see in the image? What level of detail is required? It is essential to provide the optimal frame rate and achieve the desired storage and archive requirements.

Weather – Is there a lot of snow, rain or wind where the camera is located – all of which can lead to scene motion (motion between frames)?

Lighting – Is the lighting constant or changing? What needs to be seen in low-light conditions? Low-light conditions can lead to noisy images.

Scene Motion – How much motion occurs from frame-to-frame?

Object Speed – What is the speed with which objects move through the field of view?

Camera Motion – Is the camera moving or fixed? How quickly or slowly does a camera pan, tilt or zoom?

Recording – How long are images stored? Is recording constant or based on motion?

Live Viewing – What level of latency is acceptable? Is real-time viewing or multi-camera viewing required?

Bandwidth – How much bandwidth is required to meet your specific requirements? Do any bandwidth limitations exist?

Remote Access – Is remote access to video required?

PC/Servers – What memory and processing power is required to meet your requirements? What PCs are available for viewing video?
